Kubernetes

Introduction to Kubernetes

A practical introduction to Kubernetes

Prerequisites

- Have Docker installed - Link

- Have a local Kubernetes cluster running (Docker Desktop or Kind) - Link

- Have VS Code installed (Optional) - Link

What you will learn today

- What Kubernetes is and what it solves

- Kubernetes basic concepts

- How to deploy your Docker images

- How to run Jobs

- How to use secrets

- How to use volumes

What is Kubernetes?

Kubernetes is an open-source container orchestrator. It automates the configuration, deployment and scaling of containers in a simple, declarative way.

Kubernetes Main Features

- Service Discovery and Load Balancing

- Automated rollouts and rollbacks

- Self-healing

- Secret and configuration management

- Horizontal Scaling

- Designed for extensibility

Pods

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

A Pod is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers.

Specifications

This is a simple Pod specification:

```yaml
apiVersion: v1 # Tells Kubernetes which API version to use
kind: Pod # Tells Kubernetes which type of resource to create
metadata:
  name: nginx # Name of the pod
  labels:
    app: nginx # Label for this pod; labels make it easier to find and select pods
spec:
  containers: # Describes the containers in this pod
    - name: nginx
      image: nginx:1.14.2
      ports:
        - containerPort: 80
```
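The definition above says a Pod can group several containers that share storage and network. The following is a hedged sketch of that idea. The pod name `nginx-with-sidecar`, the `content` helper container, the `html` volume and the busybox command are illustrative assumptions and are not part of this tutorial's demo. The helper writes a page into an `emptyDir` volume that both containers mount, and nginx serves that page. Both containers also share the Pod's network namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-with-sidecar # hypothetical name
spec:
  volumes:
    - name: html # hypothetical volume shared by both containers
      emptyDir: {} # Scratch storage that lives as long as the Pod
  containers:
    - name: nginx
      image: nginx:1.14.2
      ports:
        - containerPort: 80
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html # nginx serves files from here by default
    - name: content # hypothetical helper container
      image: busybox:1.36
      # Writes a page into the shared volume, then stays alive
      command: ["sh", "-c", "echo 'Hello from the sidecar' > /data/index.html && sleep 3600"]
      volumeMounts:
        - name: html
          mountPath: /data # Same volume, mounted at a different path
```

After applying it, `kubectl port-forward pod/nginx-with-sidecar 8080:80` should let you open http://localhost:8080 and see the page written by the helper container.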

Main Commands

```shell
kubectl apply -f [filename] # Creates or updates the resources defined in the file
kubectl get pods # Lists pods
kubectl port-forward [resourceType]/[resourceName] [hostPort]:[resourcePort] # Forwards a local port to the resource
kubectl logs [pod name] -c [container name (optional)] # Gets the logs of a pod
kubectl delete [resourceType] [resourceName] # Deletes a resource
```

Hands-on

Now try running our Postgres database in a Pod and connecting to it:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: postgres
  labels:
    name: postgres
spec:
  containers:
    - name: postgres
      image: postgres:14
      ports:
        - containerPort: 5432
      env:
        - name: POSTGRES_PASSWORD
          value: rn6yBZ1QG7x1asvBRzaX
        - name: POSTGRES_DB
          value: demo
```

Deployments

Deployments let Kubernetes create and manage replicas of a pod. You declare how many replicas you want, and Kubernetes keeps that many running and replaces pods that fail. You scale by changing the replica count. Scaling automatically requires an HPA.

Pods are one of the foundations of Kubernetes, but you rarely create them directly. More often they serve as building blocks for higher-level resources such as Deployments and Jobs.

Deploying frontend

```yaml
apiVersion: apps/v1 # Deployments use a different API version than Pods
kind: Deployment
metadata:
  name: frontend # Used as a prefix for the names of the pods it creates (e.g. frontend-<hash>-<suffix>)
spec:
  replicas: 3 # Desired number of replicas
  selector: # Tells the Deployment which pods belong to it
    matchLabels:
      app: frontend
  template: # Template for the pods
    metadata:
      labels:
        app: frontend # Must match spec.selector.matchLabels
    spec:
      containers:
        - name: demo
          image: rodsantos/demo-frontend:latest
          env: # Environment variables
            - name: FOO
              value: bar
          ports:
            - containerPort: 3000
```
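The feature list mentions automated rollouts. A Deployment's `strategy` field controls how it replaces pods when the template changes, for example when the image tag changes. This is a minimal sketch based on the frontend Deployment above, with `env` omitted for brevity. The `maxSurge` and `maxUnavailable` values are illustrative choices, not settings from this tutorial.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  strategy:
    type: RollingUpdate # Default strategy: replaces pods gradually
    rollingUpdate:
      maxSurge: 1 # At most 1 extra pod above `replicas` during the rollout
      maxUnavailable: 0 # Never go below 3 available pods while updating
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: demo
          image: rodsantos/demo-frontend:latest
          ports:
            - containerPort: 3000
```

You can follow a rollout with `kubectl rollout status deployment/frontend` and go back to the previous revision with `kubectl rollout undo deployment/frontend`.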

Main Commands

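```shell
kubectl apply -f [filename] # Creates or updates the resources defined in the file
kubectl get deployments # Lists deployments
kubectl get pods # Lists pods
kubectl port-forward [resourceType]/[resourceName] [hostPort]:[resourcePort] # Forwards a local port to the resource
kubectl delete pod [resourceName] # Deletes a pod (if it belongs to a Deployment, a replacement is created)
kubectl delete deployment [resourceName] # Deletes the Deployment and its pods
```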

Hands-on

Now it is your turn to create a deployment for the image rodsantos/demo-backend-application 🚀

Hint:

```yaml
- name: DATABASE_URL # Composing an env variable from other env variables
  value: postgres://$(POSTGRES_USER):$(POSTGRES_PASSWORD)@$(POSTGRES_SERVICE):$(POSTGRES_PORT)/$(POSTGRES_DB)
```

Hands-on

The deployment would look like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
        - name: demo
          image: rodsantos/demo-backend-application:latest
          env:
            - name: POSTGRES_PASSWORD
              value: rn6yBZ1QG7x1asvBRzaX
            - name: POSTGRES_DB
              value: demo
            - name: POSTGRES_USER
              value: postgres
            - name: POSTGRES_SERVICE
              value: postgres-service
            - name: POSTGRES_PORT
              value: "80"
            - name: DATABASE_URL # Composing env variable
              value: postgres://$(POSTGRES_USER):$(POSTGRES_PASSWORD)@$(POSTGRES_SERVICE):$(POSTGRES_PORT)/$(POSTGRES_DB)
          ports:
            - containerPort: 4000
```
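`$(VAR_NAME)` references are expanded only from environment variables defined earlier in the same container's `env` list. A reference that cannot be resolved is left in the string unchanged, and `$$` escapes a literal `$`. This is why `DATABASE_URL` comes last. The hypothetical fragment below, with made-up variable names, shows each case.

```yaml
env:
  - name: GREETING # hypothetical variable
    value: "Hello $(NAME)" # NAME is defined later, so this stays the literal text "Hello $(NAME)"
  - name: NAME
    value: world
  - name: MESSAGE
    value: "Hello $(NAME)" # NAME is already defined, so this becomes "Hello world"
  - name: LITERAL
    value: "$$(NAME)" # $$ escapes the reference, so this becomes the literal text "$(NAME)"
```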

Services

Now that we have multiple pods running, we need a way to distribute requests between them. Services serve this purpose.

A Service acts as a load balancer. It uses a label selector to find the pods that should receive requests, and it gives them a single, stable name (for example, postgres-service) that other pods can use.

Specification

```yaml
apiVersion: v1
kind: Service
metadata:
  name: postgres-service
spec:
  selector:
    name: postgres
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5432
```

Specification

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-service # Service name
spec:
  selector:
    app: frontend # Pod label the service selects
  ports:
    - protocol: TCP
      port: 80 # Port exposed by the service
      targetPort: 3000 # Pod port
```
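A Service without a `type` is `ClusterIP`. It is reachable only from inside the cluster, which is why these examples use `kubectl port-forward`. As an illustrative variant, a `NodePort` Service also opens a port on every node. The name `frontend-nodeport` and port `30080` are assumptions. Whether `localhost:30080` works from your machine depends on the cluster: Docker Desktop typically exposes it, while kind needs extra port mappings in its cluster configuration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-nodeport # hypothetical name
spec:
  type: NodePort # Also exposes the service on a port of every node
  selector:
    app: frontend # Same pod label as frontend-service
  ports:
    - protocol: TCP
      port: 80 # Port inside the cluster
      targetPort: 3000 # Pod port
      nodePort: 30080 # Must be in the node port range (30000-32767 by default)
```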

Hands-on

Now it is your chance to create a service for your backend deployment 🚀

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-service # Service name
spec:
  selector:
    app: backend # Pod label the service selects
  ports:
    - protocol: TCP
      port: 80 # Port exposed by the service
      targetPort: 4000 # Pod port
```

Secrets

So far we have been copying and pasting the database password every time we need it, which is neither secure nor practical.

Secrets allow you to store sensitive values and to consume them in other Kubernetes objects such as Pods, Deployments or Jobs.

Creating secrets

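```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret-name # Secret names may only use lowercase letters, digits, '-' and '.'
data:
  mysecret: YWRtaW4= # base64-encoded value ("admin")
```

Consuming secrets

```yaml
env:
  - name: MYSQL_USER # Environment variable name
    valueFrom:
      secretKeyRef:
        name: secret-name # Name of the Secret
        key: mysecret # Key inside the Secret's data
```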
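When a container needs every key of a Secret, `envFrom` with a `secretRef` loads all of them at once and uses each key as a variable name. This is a hedged sketch. It assumes a Secret named `secret-name`, which is a hypothetical name, and assumes that the Secret's keys are valid environment variable names. The optional `prefix` value is also illustrative.

```yaml
envFrom:
  - prefix: APP_ # Optional: prepended to every variable name (e.g. APP_mysecret)
    secretRef:
      name: secret-name # Every key in this Secret becomes an env variable
```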

Hands-on

Now it is your chance to create a secret for your database 🚀

Hint:

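```shell
echo -n 'My secret password' | base64 # Encodes the value as base64 (an encoding, not encryption); -n stops echo from adding a trailing newline
```

Hands-on

Secret:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: postgres
data:
  password: cm42eUJaMVFHN3gxYXN2QlJ6YVg= # base64 of rn6yBZ1QG7x1asvBRzaX, without a trailing newline
```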
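Encoding values by hand is easy to get wrong. For example, `echo` without `-n` appends a newline that ends up inside the encoded value. Secrets also accept a `stringData` field with plain-text values, and Kubernetes base64-encodes those into `data` when it stores the Secret. This is a sketch of the same database Secret written that way.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: postgres
stringData:
  password: rn6yBZ1QG7x1asvBRzaX # Plain text here; stored base64-encoded under data
```

Keep in mind that the password is still readable by anyone who can read the file.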

Deployment:

```yaml
# ...
env: # Environment variables
  - name: POSTGRES_PASSWORD
    valueFrom:
      secretKeyRef:
        name: postgres # Name of the Secret
        key: password # Key inside the Secret
# ...
```

Jobs

Kubernetes lets you create Jobs, which run a task in one or more pods until it completes, and CronJobs, which create Jobs on a schedule.

Jobs have built-in retries in case of failure. backoffLimit sets how many times the Job retries before it is marked as failed.

Applying this configuration:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: migration
spec:
  backoffLimit: 4 # Number of retries before the Job is marked as failed
  template: # Template for the pod(s) the Job creates
    spec:
      containers:
        - name: migration
          image: rodsantos/demo-migration:latest
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres
                  key: password
            - name: POSTGRES_DB
              value: demo
            - name: POSTGRES_USER
              value: postgres
            - name: POSTGRES_SERVICE
              value: postgres-service
            - name: POSTGRES_PORT
              value: "80"
            - name: DATABASE_URL
              value: postgres://$(POSTGRES_USER):$(POSTGRES_PASSWORD)@$(POSTGRES_SERVICE):$(POSTGRES_PORT)/$(POSTGRES_DB)
      restartPolicy: Never # Job pods must use Never or OnFailure
```

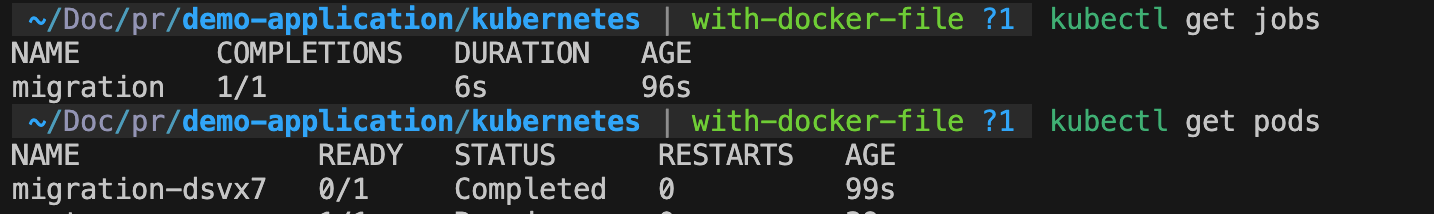

Will result into:
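CronJobs wrap a Job template in a schedule. The following is a minimal sketch that assumes you want to run the same migration image every night. The name `nightly-migration` and the schedule are illustrative and are not part of the demo. The `env` block is omitted for brevity, but a real CronJob would need the same variables as the migration Job.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-migration # hypothetical name
spec:
  schedule: "0 3 * * *" # Cron syntax: every day at 03:00
  jobTemplate: # Same shape as a Job's spec
    spec:
      backoffLimit: 4
      template:
        spec:
          containers:
            - name: migration
              image: rodsantos/demo-migration:latest
              # env omitted: it would need the same variables as the migration Job
          restartPolicy: Never
```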

Volumes

If you delete the Postgres pod and create it again, you will notice that all the data we saved is gone. This is obviously not acceptable for a database, so what causes it?

Docker images are immutable by nature, and containers only have a temporary writable layer. If a container stores data on disk and the container is deleted, that data is gone.

Volumes are the mechanism that prevents this. A volume mounts external storage into your container, so data written there persists even when the container is deleted.

This is a basic example of a Volume:

```yaml
# ...
spec:
  containers:
    - name: postgres
      image: postgres:14
      # ...
      volumeMounts: # Mounts volumes into the container
        - mountPath: /var/lib/postgresql/data # Path inside the container where the volume is mounted
          name: storage # Which volume (from spec.volumes) to mount
  volumes:
    - name: storage # Name of the volume
      hostPath: # Volume type that stores files in a directory on the node
        path: /tmp/volume # Path on the node
        type: DirectoryOrCreate # Creates the directory if it does not exist
```
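`hostPath` keeps data in a directory on the node. The data is only seen again if the pod is scheduled back onto the same node, and with kind the node is itself a container. A more portable option is to request storage through a PersistentVolumeClaim. This sketch assumes your cluster has a default StorageClass; Docker Desktop and kind typically ship one. The claim name `postgres-data` and the size are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce # Mountable read-write by a single node
  resources:
    requests:
      storage: 1Gi # Requested size (illustrative)
---
# Postgres pod fragment: the hostPath volume replaced by the claim
# ...
spec:
  containers:
    - name: postgres
      image: postgres:14
      # ...
      volumeMounts:
        - mountPath: /var/lib/postgresql/data
          name: storage
  volumes:
    - name: storage
      persistentVolumeClaim:
        claimName: postgres-data # Uses the claim defined above
```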

What was not covered

- HPA (Horizontal Pod Autoscaling) - Link

- CRD (Custom Resource Definition) - Link

- Helm Charts - Link

- Probes - Link

- Namespaces - Link

FAQ

- Is there a better way to expose pods to external requests? Yes. An Ingress routes incoming requests from outside the cluster to Services. Depending on the ingress controller, it supports things like NGINX configuration, path-to-service mapping and canary strategies.

- Is there any way to automate scaling? Yes. An HPA (Horizontal Pod Autoscaler) adds or removes pods based on metrics such as CPU or memory usage.

- Writing configuration files seems a bit repetitive if there are different environments. Is there a way to improve this? Yes. Helm Charts let you create a "package" that contains the configuration for several resources and supports dynamic configuration using variables, conditions and loops.
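Both the Ingress and the HPA answers are resources written in YAML like everything else. Below are two hedged sketches that reuse this tutorial's services and backend Deployment.

- **Ingress:** it only works if an ingress controller such as ingress-nginx is installed. The `nginx` class name, the `demo-ingress` name and the `/api` path are assumptions.
- **HPA:** it needs metrics-server plus CPU requests on the backend containers, which the deployment above does not set. The `backend` HPA name and the thresholds are illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress # hypothetical name
spec:
  ingressClassName: nginx # Assumes the ingress-nginx controller
  rules:
    - http:
        paths:
          - path: /api # hypothetical path routed to the backend
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 80
          - path: / # Everything else goes to the frontend
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: backend # hypothetical name
spec:
  scaleTargetRef: # The Deployment whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: backend
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # Target average CPU usage, as % of the pods' CPU requests
```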