Terraform Certification (Terraform Associate (003))

Test Details

| Description | Detail |
|---|---|
| Duration | 1 Hour |
| Multiple Choice | 50-60 |
| Location | Online |
| Expiration | 2 Years |
| Min Grade | 70% |

IaC and its Benefits

IaC is short for Infrastructure as Code. It lets you write down whatever you want to deploy as code. This strategy allows you to create resources in an automated and predictable way, reducing errors and keeping a history of changes.

Terraform Characteristics

Terraform uses a language called HCL (HashiCorp Configuration Language) to declare IaC. It is platform agnostic, allowing you to deploy to most of the big cloud providers and even to several providers at once. Terraform tracks the state of each deployed resource and brings it to your desired state.

Terraform Workflow

There are 3 major steps in the terraform workflow:

- Write - You write your Terraform code and store it somewhere (e.g. GitHub)

- Plan - You run the plan command to review the changes that will be applied to your infrastructure

- Apply - You deploy the changes, provisioning real infrastructure

Terraform main commands

Terraform Init

terraform init initializes the working directory that contains your Terraform code. It follows these steps:

- Downloads components - Downloads the providers (plugins) and modules that the configuration uses

- Sets up backend - Configures the backend that will store the Terraform state file

This command should be executed when you start a new Terraform project and whenever you add or update providers, modules or the backend configuration.

The downloaded providers are binaries that execute API calls to vendors; those API calls are abstracted away by Terraform.

By default, providers are installed into the .terraform directory inside the working directory. The flag -plugin-dir=PATH forces Terraform to install plugins only from the given local directory instead of downloading them from a registry.

There are a couple of flags that can be interesting:

- -lock=false - Disables state locking during state-related operations

- -upgrade - Upgrades modules and plugins to the newest versions allowed by the version constraints

- -backend=false - Skips backend configuration

- -backend-config= - Supplies the missing settings of a partial backend configuration, for cases where the backend is dynamic
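
As a rough sketch of partial backend configuration, the backend block can leave out the environment-specific settings and receive them at init time. The file name backend-dev.hcl is a hypothetical choice; the bucket, key and region values are reused from the S3 backend example later in these notes.

```hcl
# main.tf - only the settings shared by every environment are hard-coded
terraform {
  backend "s3" {
    key = "terraformstatefile"
  }
}

# backend-dev.hcl (hypothetical file) holds the remaining settings:
#
#   bucket = "myawesomebucket2344"
#   region = "us-east-1"
#
# It is supplied when initializing the working directory:
#
#   terraform init -backend-config=backend-dev.hcl
```

Keeping one such file per environment lets the same code be initialized against different state locations.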

Terraform Plan

terraform plan reads the code, creates and shows a plan of the execution/deployment. It does API calls to vendors, but only fetches information.

The plan allows the user to review the action plan before executing anything.

Since it needs to call vendors it also needs to use authentication credentials to connect to them.

Terraform Apply

terraform apply deploys the instructions and statements in the code provisioning the infrastructure specified.

It updates the deployment state tracking file.

It is possible to destroy the infrastructure instead by adding the flag -destroy (equivalent to running terraform destroy).

It is possible to apply changes only to specific resources by adding the flag -target=RESOURCE_ADDRESS.

Terraform Destroy

terraform destroy is about deprovisioning infrastructure. It looks at the recorded state file created during terraform apply and deprovisions all resources created by it.

This command should be used with caution as it is a non-reversible command. Take backups and be sure that you want to delete infrastructure.

Terraform Providers

Providers are Terraform's way of abstracting integrations with the API control layer of infrastructure vendors. They are written in Go, and when you run terraform init only the compiled binary is downloaded.

Terraform finds and installs providers when initialising the working directory (terraform init). By default, it looks for providers in the public Terraform Registry.

Providers are plugins. They are released at a different pace from Terraform itself, and each provider has its own series of version numbers, so as a best practice providers should be pinned to a specific version or version range.
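
A minimal sketch of pinning a provider version, assuming the AWS provider; the version numbers are only illustrative.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws" # registry address of the provider
      version = "~> 5.0"        # any 5.x release, but never 6.0 or later
    }
  }
}
```

An exact pin such as version = "5.31.0" is stricter, while the ~> operator still lets minor and patch releases through.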

Configuring providers

# provider is a reserved keyword

# "aws" is the name of the provider, which is fetched from the public Terraform Registry

provider "aws" {

region = "us-east-1" # this allows you to pass configuration parameters

}

Another example:

provider "google" {

credentials = file("credentials.json")

project = "my-project"

region = "us-west1"

}

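Important - The source argument tells Terraform where to fetch code from. For modules it can point to local paths, the Terraform Registry, Git repositories, HTTP URLs, S3 buckets and GCS buckets, optionally selecting a subdirectory. For providers, source is the registry address of the provider.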

Declaring Resources

# resource is a reserved keyword

# Then you have the resource type

# At last you have an alias that you give to this resource

resource "aws_instance" "web" {

ami = "ami-a1b2c3d4"

instance_type = "t2.micro"

}

To access the values you follow this pattern [resource-type].[resource-alias].[resource_property]
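
As a small illustration of this pattern, a second resource can read an attribute of the instance declared above. The aws_eip resource and its name web_ip are hypothetical additions, used only to show the reference.

```hcl
# Hypothetical Elastic IP attached to the instance declared above
resource "aws_eip" "web_ip" {
  # [resource-type].[resource-alias].[resource_property]
  instance = aws_instance.web.id
}
```

Because of this reference, Terraform infers that the instance must be created before the Elastic IP.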

Querying Resources

# data is a reserved keyword

# Then you have the resource type

# At last you have an alias that you give to this resource

data "aws_instance" "my-vm" {

instance_id = "i1234567890" # What will be queried, this will vary depending on the resource type

}

To access the values you follow this pattern data.[resource-type].[resource-alias].[resource_property]

- Terraform executes code in files with the .tf extension

- Terraform looks for providers in the public Terraform Registry, but it also allows you to create your own private registry

The following data source can be used to fetch outputs from the state of another configuration.

data "terraform_remote_state" "remote_state" {

backend = "remote"

config = {

organization = "hashicorp"

workspaces = {

name = "vpc-prod"

}

}

}
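
A sketch of how a value fetched this way could be consumed, assuming the vpc-prod workspace declares an output named subnet_id (a hypothetical name):

```hcl
# Assumes the "vpc-prod" workspace exposes an output called "subnet_id"
resource "aws_instance" "app" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.micro"

  # data.terraform_remote_state.[name].outputs.[output-name]
  subnet_id = data.terraform_remote_state.remote_state.outputs.subnet_id
}
```

Only root-module outputs of the other configuration are exposed this way.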

Terraform State

Terraform state is the mechanism Terraform uses to know what has been deployed. Terraform refers back to it when executing commands so it knows what to create, update or delete.

It is a JSON dump containing all the metadata about your Terraform deployment as well as details about all the resources that it has deployed. It is named terraform.tfstate by default and stored in the same directory where your terraform code is, but for better integrity and availability it can also be stored remotely, by using the backend feature.

Terraform Variables

# variable is a reserved keyword

# then comes the name of the variable

variable "my-var" {

# all the properties are optional

description = "My test variable"

type = string

default = "Hello"

sensitive = true # hides the value from the output

}

To reference a variable use the following structure var.[variable-name]

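Variable values can be assigned in several places, but as a best practice they should be stored in terraform.tfvars.

Precedence - Sources are loaded in the following order, with later sources overriding earlier ones: environment variables (lowest precedence), terraform.tfvars, terraform.tfvars.json, *.auto.tfvars and *.auto.tfvars.json, and last the -var and -var-file command-line options (highest precedence).

Environment variables are defined following the pattern TF_VAR_[variable_name]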
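
To make the ordering concrete, here is a sketch with a hypothetical variable named region; the values are made up and the non-HCL parts are shown as comments.

```hcl
# variables.tf - the default is used only when no other source sets a value
variable "region" {
  type    = string
  default = "us-east-1"
}

# Environment variable (lowest precedence of the explicit sources):
#
#   export TF_VAR_region=us-east-2
#
# terraform.tfvars - loaded automatically, overrides TF_VAR_region:
#
#   region = "us-west-2"
#
# Command line - overrides everything above:
#
#   terraform apply -var="region=eu-west-1"
```

With all three set, the apply would use eu-west-1.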

Variable Validation

variable "my-var" {

# all the properties are optional

description = "My test variable"

type = string

default = "Hello"

validation {

condition = length(var.my-var) > 4

error_message = "The string must be more than 4 characters"

}

}
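
Another common shape for a validation rule is restricting a variable to a fixed set of values. The variable name environment and its allowed values are assumptions made for this sketch.

```hcl
variable "environment" {
  description = "Deployment environment"
  type        = string

  validation {
    # contains() returns true when the list includes the given value
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "The environment must be one of: dev, staging, prod."
  }
}
```

If the condition evaluates to false, Terraform stops and reports the error message.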

Variable types

The primitive types are: string, number, bool

The complex types are: list, set, map, object, tuple

List, set and map are collection types and hold elements of a single type

Object and tuple are structural types and can hold values of multiple types

There is also the any type constraint, a placeholder that lets Terraform accept any type.

variable "availability_zone_names" {

type = list(string)

default = ["us-west-1a"]

}

variable "docker_port" {

type = object({

internal = number

external = number

protocol = string

})

default = {

internal = 8300

external = 8300

protocol = "tcp"

}

}
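
The remaining complex types follow the same pattern. The variable names and values below are hypothetical, chosen only to show one collection of each kind plus a tuple.

```hcl
# map: a collection of values of one type, identified by string keys
variable "instance_tags" {
  type = map(string)
  default = {
    Name = "webserver"
    Team = "platform"
  }
}

# set: an unordered collection of unique values of one type
variable "allowed_ports" {
  type    = set(number)
  default = [22, 80, 443]
}

# tuple: a fixed-length sequence where each position has its own type
variable "endpoint" {
  type    = tuple([string, number, bool])
  default = ["example.internal", 8300, true]
}
```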

Important - Terraform will try to convert the values passed to a string variable, so 102, "102" and "potato" are all valid values (as long as Terraform is able to convert them to a string)

Terraform Outputs

output "instance_ip" {

description = "VM's private IP"

sensitive = false

value = aws_instance.my-vm.private_ip # The only config parameter required

}

Output variable values are shown on the shell after running terraform apply.

Terraform Provisioner

A mechanism that gives Terraform a way of running custom scripts, commands or actions.

You can either run it locally (on the machine where the terraform commands are being executed), or remotely on resources spun up through the Terraform deployment.

Within Terraform code, each individual resource can have its own provisioner, defining the connection method (e.g. SSH, WinRM) and the actions, commands or script to execute.

There are 2 types of provisioners: "Creation-time" and "Destroy-time"

Provisioners should be a last resort, and the Terraform state does not track what they do.

Provisioners are recommended for use when you want to invoke actions not covered by Terraform's declarative model.

If the command within a creation-time provisioner returns a non-zero exit code, it is considered failed and the resource will be tainted (marked for deletion and recreation on the next apply)
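
When a failure should not stop the run, a provisioner accepts the on_failure argument. The resource name and the failing command below are made up for this sketch.

```hcl
resource "null_resource" "tolerant" {
  provisioner "local-exec" {
    # "exit 1" simulates a command that fails
    command = "exit 1"

    # continue ignores the error and carries on with creation;
    # the default, fail, raises the error and taints the resource
    on_failure = continue
  }
}
```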

resource "null_resource" "dummy_resource" {

# runs when the resource is created

provisioner "local-exec" {

command = "echo '0' > status.txt"

}

provisioner "local-exec" {

when = destroy

command = "echo '1' > status.txt"

}

}

Inside a provisioner or connection block you can reference the attributes of the parent resource by using the self object

resource "aws_instance" "webserver" {

ami = data.aws_ssm_parameter.webserver-ami.value

instance_type = "t3.micro"

key_name = aws_key_pair.webserver-key.key_name

associate_public_ip_address = true

vpc_security_group_ids = [aws_security_group.sg.id]

subnet_id = aws_subnet.subnet.id

provisioner "remote-exec" {

inline = [

"sudo yum -y install httpd && sudo systemctl start httpd",

"echo '<h1><center>My Test Website With Help From Terraform Provisioner</center></h1>' > index.html",

"sudo mv index.html /var/www/html/"

]

connection {

type = "ssh"

user = "ec2-user"

private_key = file("~/.ssh/id_rsa")

host = self.public_ip

}

}

tags = {

Name = "webserver"

}

}

Terraform State

It maps real-world resources to the resources defined in your Terraform code or configuration.

By default it is stored locally in a file called terraform.tfstate, but it can be saved in other places.

Prior to any modification, terraform refreshes the state file.

Resource dependency metadata is also tracked via the state file

It caches resource attributes for subsequent use.

The terraform state command is a utility for reading and manipulating the Terraform state file (advanced use case)

You can manually remove a resource from terraform state file so it won't be managed by terraform anymore.

List tracked resources and their details

| Terraform State Subcommand | Use |
|---|---|
| terraform state list | Lists all resources tracked by the state file |
| terraform state rm [RESOURCE] | Removes a resource from the Terraform state file without destroying the real resource |
| terraform state show [RESOURCE] | Shows details of a resource tracked in the Terraform state |
| terraform state push [path] | Uploads a local state file to the remote state, overwriting it |

Remote state storage

You can save the state to a remote data store such as AWS S3 or Google Cloud Storage.

This allows sharing the state file between distributed teams.

It also allows locking the state so parallel executions don't conflict.

It enables sharing output values with other Terraform configurations/code.

terraform {

backend "s3" {

profile = "demo"

region = "us-east-1"

key = "terraformstatefile"

bucket = "myawesomebucket2344"

}

}

Important: When using an S3 backend with workspaces it is possible to add a prefix to the path of each workspace state by adding the property workspace_key_prefix="prefix". For a workspace named myapp-dev and the key above, the state will then be saved at s3://myawesomebucket2344/prefix/myapp-dev/terraformstatefile
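
A sketch of the same backend with the prefix and a lock table added. The table name terraform-locks is hypothetical, and dynamodb_table is the argument the S3 backend has traditionally used for locking, so it is worth checking the backend documentation for the Terraform version in use.

```hcl
terraform {
  backend "s3" {
    profile = "demo"
    region  = "us-east-1"
    bucket  = "myawesomebucket2344"
    key     = "terraformstatefile"

    # Non-default workspaces are stored under prefix/<workspace-name>/<key>
    workspace_key_prefix = "prefix"

    # Hypothetical DynamoDB table used for state locking
    dynamodb_table = "terraform-locks"

    # Encrypts the state object at rest
    encrypt = true
  }
}
```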

State Lock

If supported by your backend, Terraform will lock your state for all operations that could write state. This prevents others from acquiring the lock and potentially corrupting your state.

State locking happens automatically on all operations that could write state. You won't see any message that it is happening. If state locking fails, Terraform will not continue.

Important - You can disable state locking for most commands with the -lock=false flag, but it is not recommended.

In case you want to remove the lock you can use the command terraform force-unlock [lock-id]

Terraform Module

It is just another folder or collection of terraform code. It groups multiple resources that are used together making code more reusable.

Every terraform configuration has at least one module, called the root module, which consists of code files in your main working directory.

Modules can be referenced from:

- Your local system:

module "name-of-the-module" {

source = "./modules/vpc" # mandatory; the version argument is not supported for local paths

region = var.region # input parameters

}

- Public registry

module "name-of-the-module" {

source = "hashicorp/consul/aws" # mandatory

version = "0.0.5" # module version

region = var.region # input parameters

}

- Private registry

module "name-of-the-module" {

source = "app.terraform.io/example-corp/k8s-cluster/azurerm" # mandatory

version = "0.0.5" # module version

region = var.region # input parameters

}

Other properties are:

count - Allows spawning multiple instances of the module resources

for_each - Allows you to iterate over complex variables, creating one module instance per element

providers - Allows you to pass specific provider configurations to the module

depends_on - Adds an explicit dependency on other resources

All outputs will be available for use by following this pattern module.[module-name].[output]
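
A sketch combining for_each with the output pattern, assuming the local VPC module declares an output named vpc_id (a hypothetical name):

```hcl
module "vpc" {
  source   = "./modules/vpc"
  for_each = toset(["us-east-1", "us-west-2"])

  region = each.value # input parameter, one module instance per region
}

# With for_each, module.vpc is a map keyed by region
output "vpc_ids" {
  value = { for region, m in module.vpc : region => m.vpc_id }
}

# A single instance is addressed by its key
output "east_vpc_id" {
  value = module.vpc["us-east-1"].vpc_id
}
```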

Best Practices

- By default, modules should be named terraform-[provider]-[name].

- Versions are not required, but highly recommended.

- For modules fetched from a Git repository, a version can be selected by adding ?ref=[reference_name] to the source, where the reference can be a version tag, a commit or a branch.
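
For instance, a module fetched over Git could pin a tag as sketched below. The repository URL and the tag v1.2.0 are hypothetical.

```hcl
module "vpc" {
  # git:: forces the Git installer; ?ref selects the tag v1.2.0
  source = "git::https://example.com/vpc.git?ref=v1.2.0"
}
```

The version argument is not used here, since it only applies to modules installed from a registry.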

Terraform built-in functions

Terraform comes pre-packaged with functions to help you transform and combine values.

User-defined functions are not allowed; only the built-in ones can be used.

You can check the available functions in the HashiCorp documentation: Functions - Configuration Language | Terraform | HashiCorp Developer
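
A few built-in functions in use, as a quick sketch. The local names and input values are made up; the comments show the value each expression evaluates to.

```hcl
locals {
  # upper converts a string to upper case: "WEBSERVER"
  name_upper = upper("webserver")

  # join concatenates list elements with a separator: "us-west-1a,us-west-1c"
  zones_csv = join(",", ["us-west-1a", "us-west-1c"])

  # length counts the elements of a collection: 2
  zone_count = length(["us-west-1a", "us-west-1c"])

  # cidrsubnet derives a subnet from a network prefix: "10.0.1.0/24"
  subnet = cidrsubnet("10.0.0.0/16", 8, 1)

  # lookup reads a map key and falls back to a default: 22
  ssh_port = lookup({ http = 80, https = 443 }, "ssh", 22)
}
```

Expressions like these can be tried interactively with terraform console.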

Dynamic blocks

A dynamic block iterates over a complex-typed value, acting like a for loop that outputs one nested block for each element. Dynamic blocks can be used inside resource, data, provider and provisioner blocks.

resource "aws_elastic_beanstalk_environment" "tfenvtest" {

name = "tf-test-name"

application = aws_elastic_beanstalk_application.tftest.name

solution_stack_name = "64bit Amazon Linux 2018.03 v2.11.4 running Go 1.12.6"

dynamic "setting" {

for_each = var.settings

content {

namespace = setting.value["namespace"]

name = setting.value["name"]

value = setting.value["value"]

}

}

}
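
The example above relies on var.settings, which is not shown. One possible declaration, with made-up values, is:

```hcl
# Hypothetical declaration of the variable consumed by the dynamic block
variable "settings" {
  type = list(object({
    namespace = string
    name      = string
    value     = string
  }))

  default = [
    { namespace = "aws:autoscaling:asg", name = "MinSize", value = "1" },
    { namespace = "aws:autoscaling:asg", name = "MaxSize", value = "2" },
  ]
}
```

With this default, the dynamic block generates two setting blocks, one per element of the list.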

It is also possible to set an iterator to change the name of the temporary variable that represents the current element (by default it is the label of the dynamic block)

resource "aws_elastic_beanstalk_environment" "tfenvtest" {

name = "tf-test-name"

application = aws_elastic_beanstalk_application.tftest.name

solution_stack_name = "64bit Amazon Linux 2018.03 v2.11.4 running Go 1.12.6"

dynamic "setting" {

for_each = var.settings

iterator = property

content {

namespace = property.value["namespace"]

name = property.value["name"]

value = property.value["value"]

}

}

}

Terraform CLI commands

terraform fmt - Formats Terraform code for readability. It only processes the current folder unless the flag -recursive is passed, and it only modifies the style of the code. You can prevent it from modifying the files by using the flag -check, which just reports whether the files are correctly formatted.

[DEPRECATED] - terraform taint RESOURCE_ADDRESS - Taints a resource, forcing it to be destroyed and recreated on the next apply. To do that, it modifies the state file, and it may also cause other resources to be modified. Use terraform apply -replace="resource_type.resource" instead.

terraform import RESOURCE_ADDRESS ID - Maps an existing resource to a Terraform resource address using an ID. The format of the ID depends on the underlying vendor. Importing the same resource to multiple Terraform resources can cause unknown behaviour and is not recommended.

Terraform configuration block

A special configuration block for controlling terraform behaviour. It only allows constant values.

terraform {

required_version = ">=0.13.0" # Requires terraform version

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 3.0.0"

}

}

backend "s3" {

profile = "demo"

region = "us-east-1"

key = "terraformstatefile"

bucket = "myawesomebucket2344"

}

}

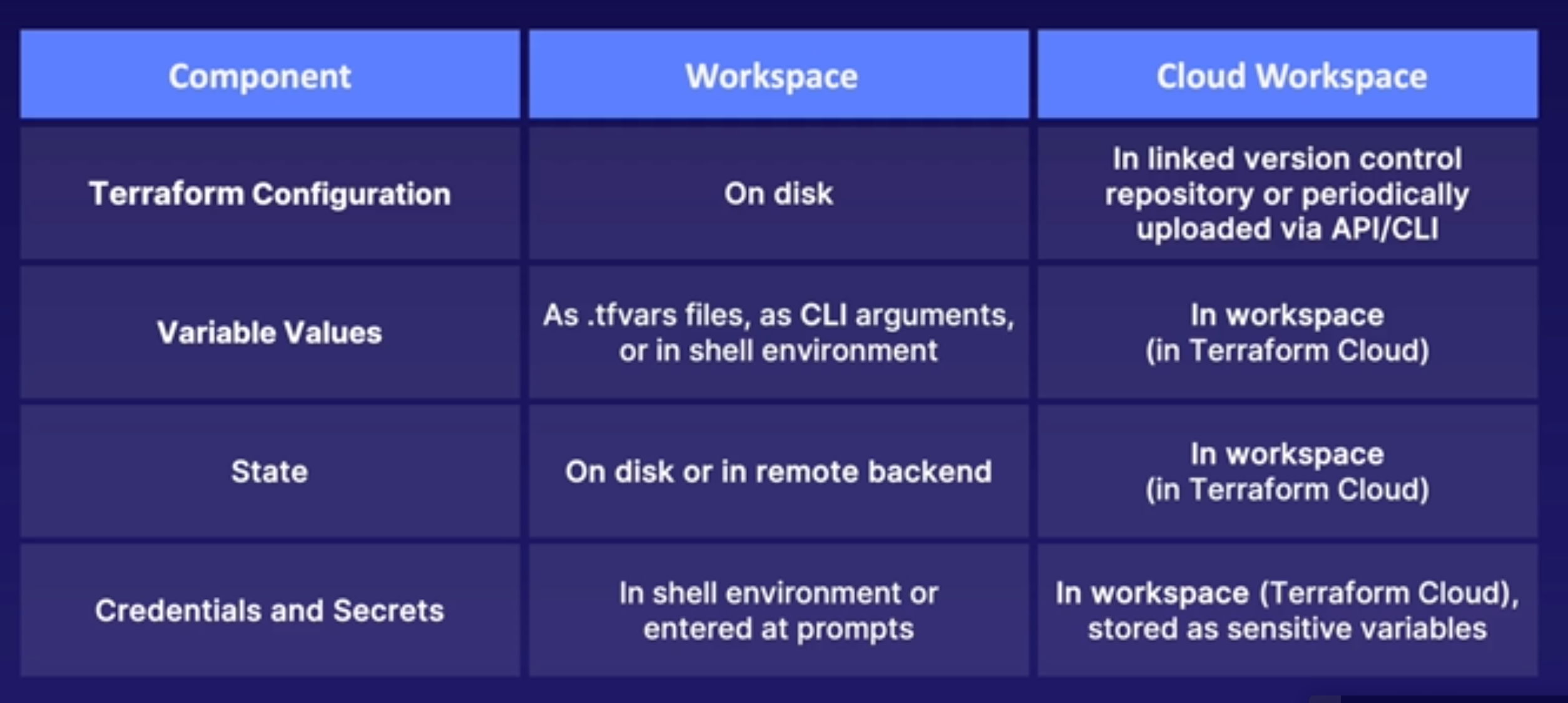

Terraform workspaces

Terraform workspaces are alternative state files within the same working directory, allowing different environments to be spawned from the same configuration.

The default workspace is called default and it cannot be deleted.

| Command | Use |
|---|---|
| terraform workspace new [workspace-name] | Creates a workspace |
| terraform workspace select [workspace-name] | Selects a workspace |

Access to a workspace name is provided through ${terraform.workspace} variable.

Important - With the local backend, the state of the non-default workspaces is stored in the terraform.tfstate.d directory
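
A sketch of using the workspace name inside the configuration, so that one codebase behaves differently per environment. The resource name, instance sizes and tag are illustrative assumptions.

```hcl
resource "aws_instance" "example" {
  ami = "ami-a1b2c3d4"

  # Smaller instance in the default workspace, larger one everywhere else
  instance_type = terraform.workspace == "default" ? "t2.micro" : "t2.large"

  tags = {
    # Embeds the workspace name, e.g. "web-default"
    Name = "web-${terraform.workspace}"
  }
}
```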

Debugging terraform

TF_LOG is an environment variable for enabling verbose logging in Terraform. By default it sends logs to stderr (standard error output).

It can be set to the following levels: TRACE, DEBUG, INFO, WARN, ERROR

TRACE is the most verbose level.

You can redirect logs to a file by setting the TF_LOG_PATH environment variable.

Terraform Cloud

Terraform Sentinel - A framework that enforces adherence to policies on your Terraform code.

It has its own policy language, the Sentinel language. It is executed between terraform plan and terraform apply. You can define guardrails for automation, for example, preventing a developer from deploying to production. It allows testing and automation.

Use cases:

- Enforce CIS standards across AWS accounts.

- Ensure that only a specific type of instance is used

- Ensure that security groups will not allow traffic on port 22

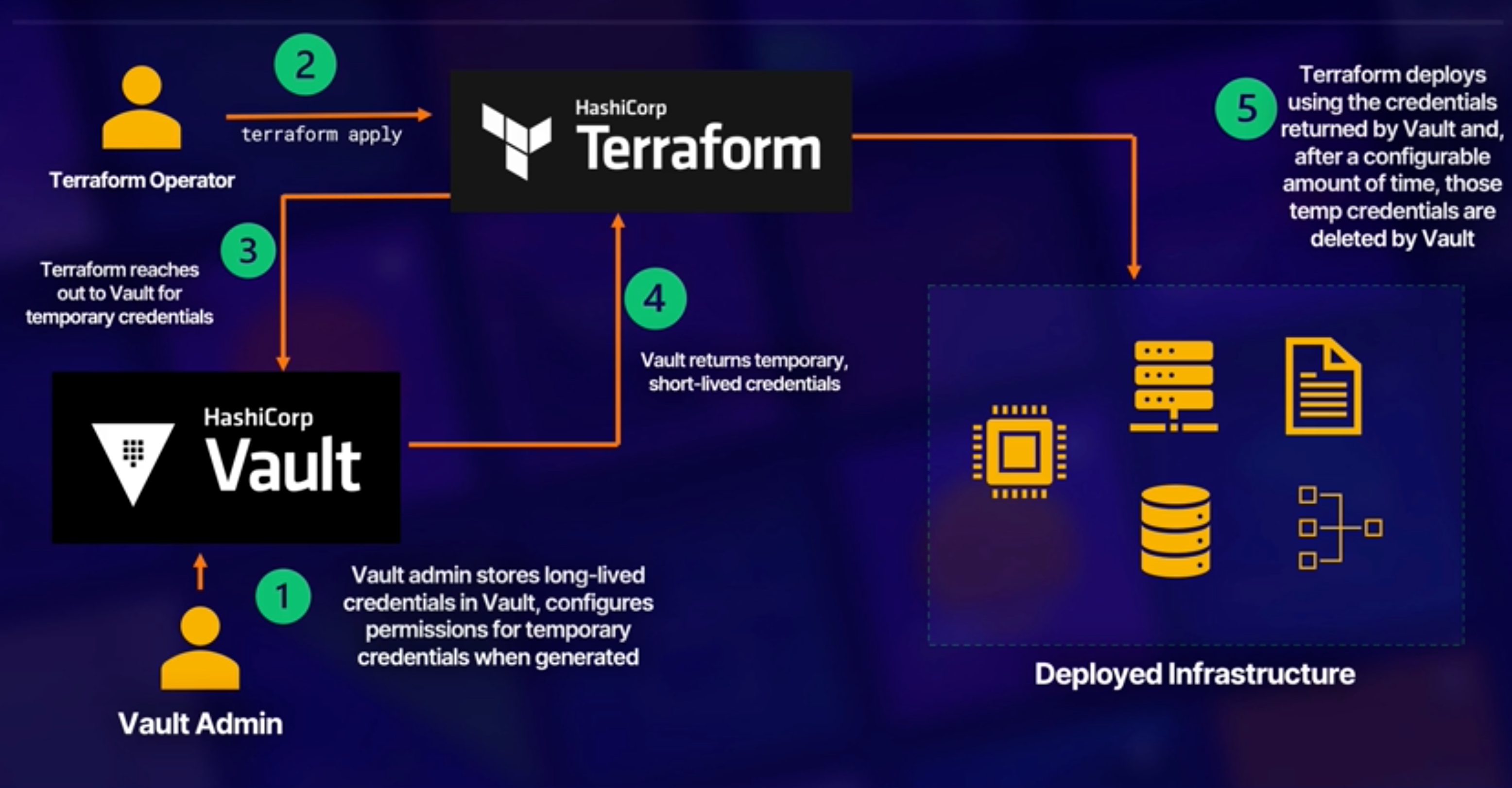

HashiCorp Vault - A secrets management tool that provides temporary credentials to users in place of actual long-lived credentials. It dynamically provisions credentials and rotates them. It encrypts sensitive data in transit and at rest, but not when executing, and provides fine-grained access to secrets using ACLs.

To consume the secrets in Terraform you use the Vault provider.
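
A minimal sketch of reading a secret through the Vault provider. The server address, the secret path secret/db and the password key are all hypothetical.

```hcl
provider "vault" {
  address = "https://vault.example.com:8200" # hypothetical Vault server
}

# Reads the secret stored at a hypothetical path
data "vault_generic_secret" "db" {
  path = "secret/db"
}

output "db_password" {
  # The data attribute is a map holding the key/value pairs of the secret
  value     = data.vault_generic_secret.db.data["password"]
  sensitive = true
}
```

Values read this way end up in the Terraform state, so the state itself has to be protected.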

Terraform Registry - A repository of providers and modules where you can collaborate with other contributors to make changes to them.

Terraform Cloud Workspaces - Work the same as local workspaces but in the cloud. Terraform Cloud maintains a record of all execution activity, and all Terraform commands are executed on managed Terraform Cloud VMs.

Terraform Cloud Private can connect to GitHub, Bitbucket, GitLab and Azure DevOps.

Terraform parallelism is 10 by default; it can be changed with the -parallelism=n flag

Terraform Backend types - enhanced and standard

- Enhanced backends can both store state and perform operations. There are only two enhanced backends: local and remote.

- Standard backends only store state and rely on the local backend for performing operations.